courtesy @self

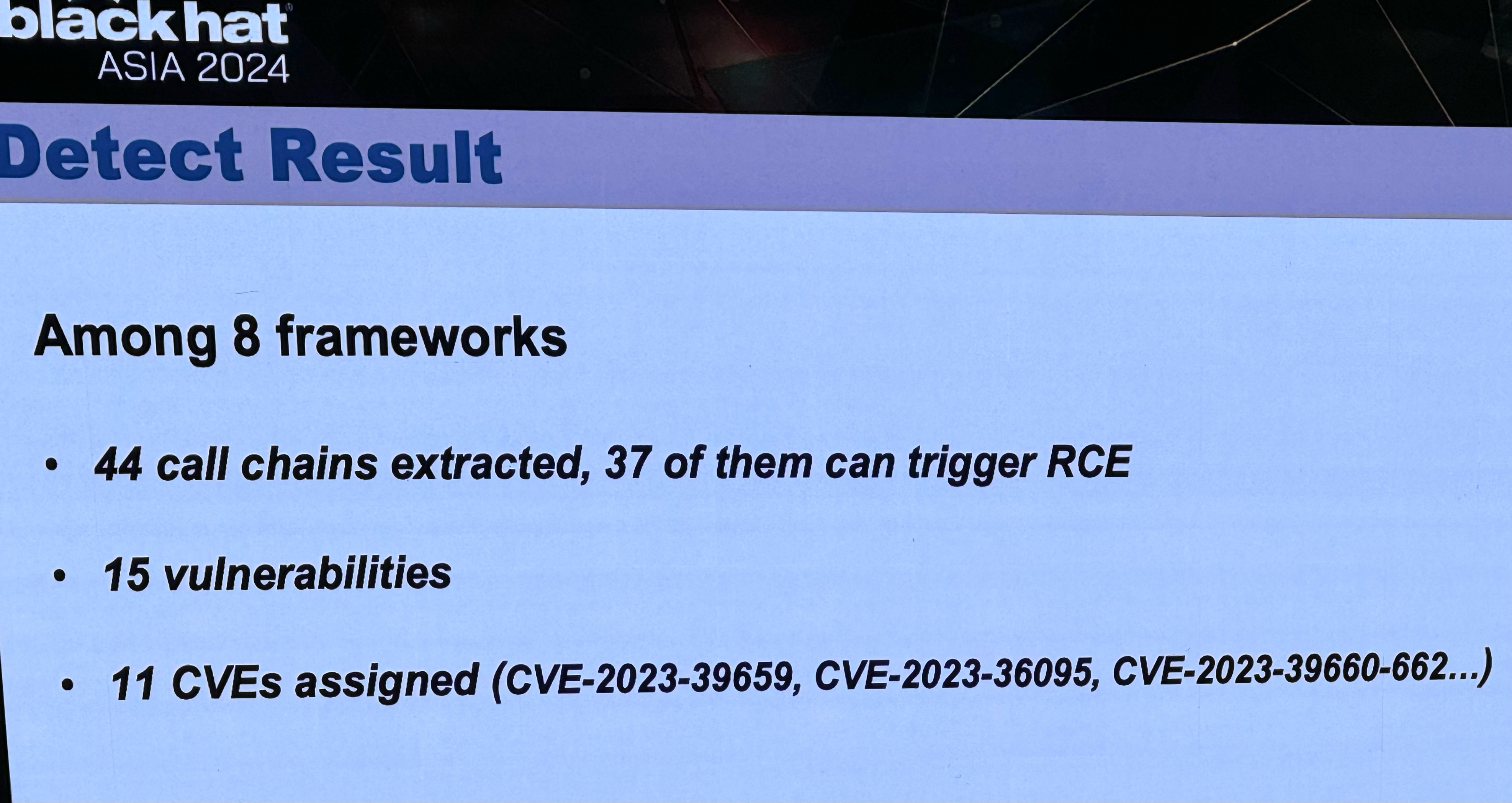

- preprint: https://arxiv.org/pdf/2309.02926

- blackhat abstract: https://www.blackhat.com/asia-24/briefings/schedule/index.html#llmshell-discovering-and-exploiting-rce-vulnerabilities-in-real-world-llm-integrated-frameworks-and-apps-37215

- Tong Liu’s related research: https://scholar.google.com/citations?hl=en&user=egWPi_IAAAAJ

can’t wait for the crypto spammers to hit every web page with a ChatGPT prompt. AI vs Crypto: whoever loses, we win

I’d argue this isn’t the job of the AI researchers; it’s more on the devs and engineers who built all the support needed to bring the AI to production: basically the UI, the underlying hardware, OS, VMs, etc.

all of the developers I know at AI-related startups identify as researchers, regardless of their actual role

no, let’s not blame unaffiliated systems engineers for this dumb shit, thanks

Oh, yeah, sorry, I forgot AI models actually run in a vacuum and need no supporting code or infrastructure to make them usable to the average user, so they don’t even need non-AI security best practices! Process isolation? OS hardening? Pfft, who needs it

i wouldn’t touch the llm stuff with a barge pole unless i was expressly told to do so, and if i’ve been told to do it, i’d look for another employer (which i’m currently doing, for tangentially-related reasons).

and it’s not that i don’t care about the llms. i do care very much about them all ending in a fiery pit of the deepest of hells.

great thanks
