courtesy @self

- preprint: https://arxiv.org/pdf/2309.02926

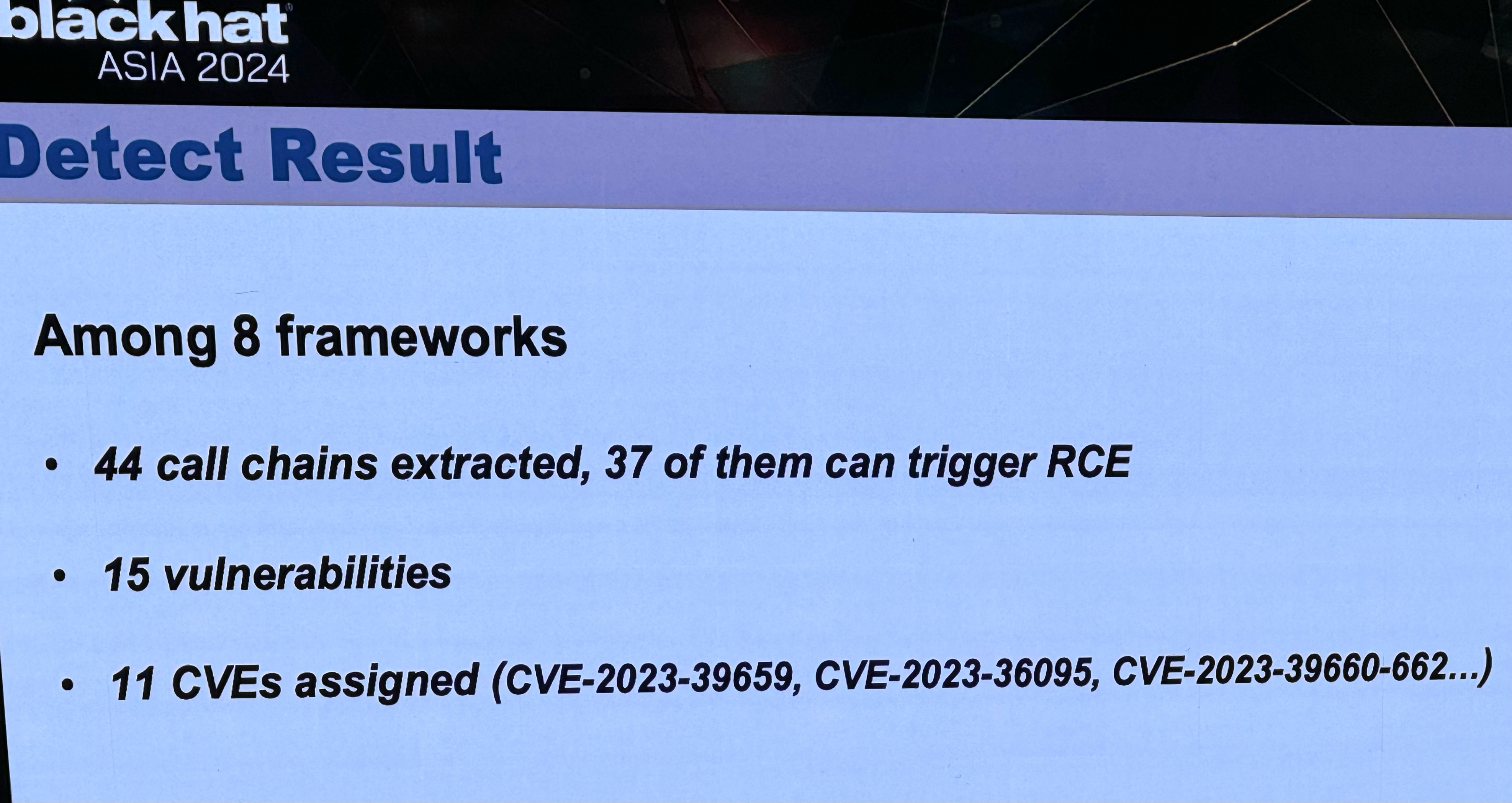

- Black Hat abstract: https://www.blackhat.com/asia-24/briefings/schedule/index.html#llmshell-discovering-and-exploiting-rce-vulnerabilities-in-real-world-llm-integrated-frameworks-and-apps-37215

- Tong Liu’s related research: https://scholar.google.com/citations?hl=en&user=egWPi_IAAAAJ

Can’t wait for the crypto spammers to hit every web page with a ChatGPT prompt. AI vs Crypto: whoever loses, we win.

From reading the paper, I’m not sure which is more egregious: the frameworks that pass code along and/or call exec directly without checking, or the ones that rely on the LLM to do the checking (I’m inferring that from the fact that some of the CVEs require jailbreaking the LLM prompt).
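To make the first failure mode concrete, here is a minimal Python sketch. It is not taken from the paper or from any specific framework: `run_unchecked`, `run_allowlisted`, and the two sample strings are hypothetical names made up for illustration. The first function is the pattern being complained about (model output goes straight into `eval`); the second is one possible alternative that parses the text and only accepts plain arithmetic, assuming the app really only needs a calculator.

```python
import ast
import operator

# Hypothetical stand-ins for text returned by an LLM (not from the paper).
LLM_OUTPUT_BENIGN = "2 + 3 * 4"
LLM_OUTPUT_HOSTILE = "__import__('os').getcwd()"


def run_unchecked(llm_output: str):
    """The risky pattern: whatever the model emits is evaluated as Python."""
    return eval(llm_output)  # arbitrary code runs if the prompt was injected


# Only these binary operators are accepted by the allowlisted evaluator.
_ALLOWED_BINOPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def run_allowlisted(llm_output: str):
    """Evaluate numeric literals with + - * / only; reject everything else."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _ALLOWED_BINOPS:
            return _ALLOWED_BINOPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        # Calls, names, attribute access, etc. all end up here.
        raise ValueError(f"disallowed syntax: {type(node).__name__}")

    return walk(ast.parse(llm_output, mode="eval"))
```

With this sketch, `run_allowlisted(LLM_OUTPUT_BENIGN)` evaluates the arithmetic, while `run_allowlisted(LLM_OUTPUT_HOSTILE)` raises `ValueError` because the parsed tree contains a call node. The check happens in ordinary code rather than in the prompt, so there is nothing for a jailbreak to talk its way around. An allowlist like this only fits narrow use cases; anything that needs to run general code would need real process-level sandboxing instead.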

If you wanted to be exceedingly charitable, you could imagine the maintainers of said frameworks claiming that “of course none of this should be used with unsanitized inputs open to the public; it’s merely a productivity tool that you would run on your own machine. Don’t worry about prompts from top Bing results being evaluated by our agent, and don’t use this for anything REAL.”