BlueMonday1984

he/they

- 81 Posts

- 1.24K Comments

11·2 days ago

I’ve seen memes about eating people’s art, but never a literal case, lmaoooooooooooo

131·3 days ago

>zero-click android exploit

>arbitrary code execution and privilege escalation

Remember when the human was the weakest part of any cybersecurity system? Pepperidge Farms remembers.

13·3 days ago

Newgrounds user turned Audio Moderator Quest has put together a recap of 2025 (text version), providing stats for how much slop she’s dealt with:

2025 Stats:

- 2818 AI-Generated Tracks Flagged or Removed

- 3656 Total Flagged or Removed Tracks

- 12.7 GB Data Used by AI-Generated Tracks

- 2843 Accounts Which Uploaded Prohibited Audio

Cumulative Stats (since 2024):

- 4475 AI-Generated Tracks Flagged or Removed

- 5731 Total Flagged or Removed Tracks

- 18.93 GB Data Used by AI-Generated Tracks

- 4113 Accounts Which Uploaded Prohibited Audio

AI Model Breakdown:

- Suno AI: 82%

- Udio AI: 5%

- Riffusion AI: 1%

- Other: 12%

  - RVC-Based: 0.6%

  - Soundful: 0.4%

  - Mixed: 0.2%

  - Various Other Models: 2.9%

  - Unknown: 7.9%

Reportedly, she’s also got an essay-length sneer in the works:

Finally, I am also working on an even larger, long-form essay post about artificial intelligence, drawing a link to something that I do not see draw enough. It’s a big project with a lot of research and knowledgeable people guiding me. This will be released in the coming months. I have a lot to say.

9·3 days ago

Starting off with a double bill of art-related sneers:

- “Down with the Gatekeepers! Who…are Artists, Apparently” by Jared White, mocking promptfondlers’ attempts to cry gatekeeper and misunderstanding of the artistic process

- “using chatgpt and other ai writing tools makes you unhireable. here’s why” by Doc Burford, going into punishing detail about LLMs’ artistic inadequacy, and promptfondlers’ artlessness

9·4 days ago

New post from Iris Meredith, doing a deep-dive into why tech culture was so vulnerable to being taken over by slop machines

71·6 days ago

Like the bottle opener on a Galil, or the “Flower Field” version of Minesweeper, it can also help distinguish your story in small, but interesting ways, and help it stick in a reader’s mind. (Had to try this trick for myself :P)

10·7 days ago

It was floated last year, and it’s happened today - Curl is euthanising its bug bounty program, and AI is nigh-certainly why.

6·7 days ago

Simon Willison defends stealing a Python library using lying machines, answering “questions” he previously “asked” in an attempt to downplay his actions.

5·7 days ago

Found a solid sneer on the 'net today: https://chronicles.mad-scientist.club/tales/on-floss-and-training-llms/

7·9 days ago

A small list of literary promptfondlers came to my attention - should complement the awful.systems slopware list nicely.

9·12 days ago

In a frankly hilarious turn of events, an award-winning isekai novel had its planned book publication and manga adaptation shitcanned after it was discovered to be AI slop.

The offending isekai is still up on AlphaPolis (where it originally won said award) as of this writing. Given it’s isekai and AI slop, expect some primo garbage.

4·13 days ago

Anyway, I can recommend skipping this episode and only bothering with the technical or more business oriented ones, which are often pretty good.

AI puffery is easy for anyone to see through. If they’re regularly mistaking it for something of actual substance, their technical/business sense is likely worthless, too.

11·13 days ago

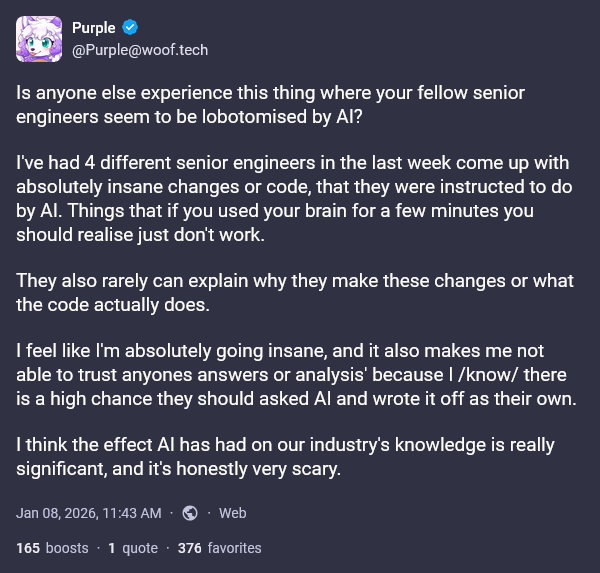

Found someone showing some well-founded concern over the state of programming, and decided to share it before heading off to bed:

alt text:

Is anyone else experience this thing where your fellow senior engineers seem to be lobotomised by AI?

I’ve had 4 different senior engineers in the last week come up with absolutely insane changes or code, that they were instructed to do by AI. Things that if you used your brain for a few minutes you should realise just don’t work.

They also rarely can explain why they make these changes or what the code actually does.

I feel like I’m absolutely going insane, and it also makes me not able to trust anyones answers or analysis’ because I /know/ there is a high chance they should asked AI and wrote it off as their own.

I think the effect AI has had on our industry’s knowledge is really significant, and it’s honestly very scary.

6·14 days ago

The OpenAI Psychosis Suicide Machine now has a medical spinoff, which automates HIPAA violations so OpenAI can commit medical malpractice more efficiently

31·14 days ago

I’d personally just overthrow the US government and make it a British colony once more /j

8·14 days ago

so now there’s even less accountability than before

How can you get less than zero accountability?

11·15 days ago

I’m so sorry to hear that.

12·15 days ago

SFGATE columnist Drew Magary has decreed “The time has come to declare war on AI”, and put out a pretty solid sneer in the process. Bonus points for openly sneering at billionaire propaganda service Ground News, too.

Ran across a thread about tech culture’s vulnerability to slop machines recently. Dovetails nicely with Iris Meredith’s recent article about the same issue, I feel.